The topic of personality tests has long been contentious. Critics have questioned their validity and dismissed them as pseudoscience, better suited to a pastime than to recruitment. Conversely, some people take them so seriously that they insist only experts should interpret the results. Most people, though, seem to fall somewhere between these two extremes.

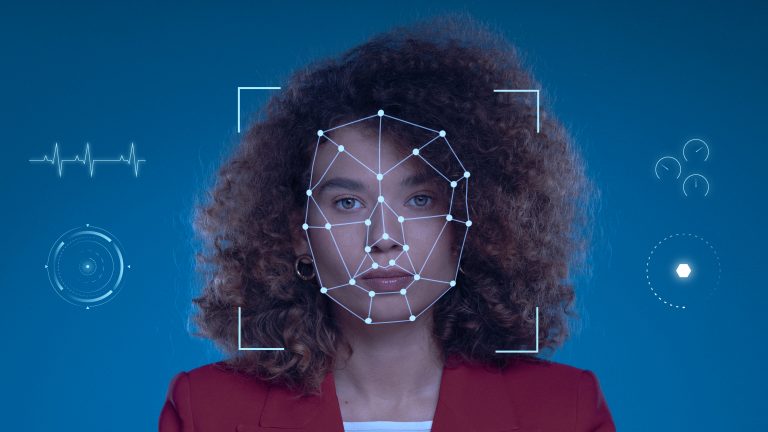

With AI now everywhere, personality assessments have become far more accessible and, therefore, more tempting to use in recruiting. AI-powered personality tests abound online, and AI can now also enhance the analysis itself, moving beyond the relatively simple scoring systems of traditional tests.

This article explores how AI enhances personality testing while highlighting the ethical and practical pitfalls employers should avoid.

What’s great about AI in personality tests

AI can be applied to personality assessments in powerful ways. It unlocks new possibilities for assessing personalities that go beyond traditional frameworks such as the Enneagram or DISC.

AI can automatically interpret candidates’ responses to psychometric or situational questions, using Natural Language Processing (NLP) to analyze open-text answers for tone, word choice, and sentiment — helping infer traits such as conscientiousness or empathy.

AI can even identify indicators of consistency, honesty, and the tendency to provide socially desirable answers.
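To make the idea of a consistency check concrete, here is a minimal sketch of one classic, non-AI technique: pairing each item with a reverse-worded twin and measuring how far the two answers drift apart. The 1-5 Likert scale, the item IDs, and the review threshold are all assumptions made for illustration; commercial tools likely use more sophisticated methods.

```python
from statistics import mean

SCALE_MAX = 5  # assumed 1-5 Likert scale

# Hypothetical item pairs: the second item is a reverse-worded twin of the first.
REVERSED_PAIRS = [("q1", "q7"), ("q3", "q9"), ("q4", "q12")]


def inconsistency_score(answers):
    """Mean absolute gap between each item and its reverse-scored twin.

    0 means perfectly consistent; the maximum is SCALE_MAX - 1.
    """
    gaps = []
    for item, reversed_item in REVERSED_PAIRS:
        # Reverse-score the twin so both items point in the same direction.
        rescored = SCALE_MAX + 1 - answers[reversed_item]
        gaps.append(abs(answers[item] - rescored))
    return mean(gaps)


def flag_for_review(answers, threshold=2.0):
    """Flag a response set for human review (the threshold is an arbitrary assumption)."""
    return inconsistency_score(answers) >= threshold
```

A candidate who strongly agrees with both "I plan ahead" and "I rarely plan ahead" would score high here. A flag like this is best treated as a prompt for a human to take a closer look, not as a verdict on honesty.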

One-way video interview software HireVue goes further and analyzes non-verbal cues. It can assess microexpressions, eye contact, and tone of voice to infer engagement or confidence. HireVue has drawn some controversy, though: as the Washington Post reported, this is mainly due to the tool’s perceived lack of explainability.

Simulating realistic work scenarios

Another way that AI surpasses traditional frameworks is that it can create interactive experiences that reveal personality traits. Candidates interact with an AI-driven environment that mimics real work challenges — such as team conflicts and time pressure. AI agents can simulate coworkers or clients to test emotional intelligence and problem-solving style. AI can also analyze behavior during gamified tasks to infer underlying traits.

Assisting in personality interpretation

AI also serves as a decision-support system for HR professionals.

For example, it can cross-reference personality test results with CV data or work samples to produce a more comprehensive and nuanced understanding of an individual’s personality profile. Additionally, it can convert raw data into concise personality profiles matched against job requirements. AI can even predict how a candidate’s personality aligns with that of existing team members.

Moreover, AI can generate reports highlighting strengths, motivations, and potential red flags. Finally, adaptive scoring models enable machine learning systems to refine their predictions of personality traits over time based on actual job performance outcomes.

Bye-bye bias?

A longstanding criticism of personality tests is that they allow for cultural bias: the Myers-Briggs Type Indicator (MBTI), for example, is said to mirror the American preference for extroverts. Here AI brings an opportunity for improvement, as it offers an affordable and effective way to adjust for different cultural contexts.

Firstly, AI can help translate and localize assessments to avoid idiomatic expressions and culturally specific norms that might disadvantage certain groups.

Secondly, AI makes it easier to account for cultural variability. For example, consider two equally confident individuals: one from a Western background, and another from an Eastern background. They might express that confidence differently due to cultural norms, and end up scoring differently. AI-driven item response analysis can detect when these variations are due to culture rather than personality differences.
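In psychometrics, this kind of check is often called differential item functioning (DIF) analysis: respondents with similar overall trait scores are compared across groups, and an item is flagged if one group still answers it systematically differently. The sketch below is a much-simplified stand-in for that idea, not a description of how any vendor does it. The field names (`group`, `total`), the group labels `"A"` and `"B"`, and the band width are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def dif_gap(responses, item, band_width=5):
    """Average between-group gap on one item among respondents with similar totals.

    `responses` is a list of dicts with hypothetical keys:
    "group" ("A" or "B"), "total" (overall trait score), and one key per item.
    Returns None when no score band contains both groups.
    """
    # Bucket respondents by total-score band, then by group.
    bands = defaultdict(lambda: defaultdict(list))
    for r in responses:
        bands[r["total"] // band_width][r["group"]].append(r[item])

    gaps, weights = [], []
    for groups in bands.values():
        if groups["A"] and groups["B"]:  # only compare bands where both groups appear
            gaps.append(mean(groups["A"]) - mean(groups["B"]))
            weights.append(len(groups["A"]) + len(groups["B"]))
    if not gaps:
        return None
    # Weight each band by how many respondents it contains.
    return sum(g * w for g, w in zip(gaps, weights)) / sum(weights)
```

A gap near zero suggests the item behaves the same way for both groups. A large gap among people with the same overall confidence score hints that the item's wording, not the trait, is driving the difference, which is a cue to reword or drop the item.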

And here’s what to avoid

❌ Hiding that it’s a personality test ❌

When administering an AI-based personality test, be transparent about it. Clearly state that it is a personality assessment and that it is AI-based. Emphasize that it’s not infallible and should complement the rest of the recruitment process, not serve as the sole deciding factor.

FedEx went viral when a candidate for a software role shared on Reddit their bad experience with a gamified, AI-based personality test. The applicant says the personality assessment wasn’t disclosed as such until the results were delivered. Surprise, surprise.

The personality test administered by the shipping giant features a blue humanoid character and is also used by other big employers. It is provided by a company named Paradox.ai. “I was instructed to pick whether the character in the image represented ‘me’ or ‘not me’. The images were bizarre and kind of funny to look through,” the candidate recalled. The process concluded with the AI presenting results based on the Five-Factor Model (FFM), also known by the acronym OCEAN: the test-taker’s levels of openness, conscientiousness, extraversion, agreeableness, and neuroticism.

❌ Offering unsolicited feedback ❌

The lack of transparency in the process was only part of what struck a chord with the candidate — and with the 56,000 people who upvoted the post on Reddit. The other issue was the feedback. Not only was it unsolicited, but it was also pretty harsh — including the remark that the applicant tolerates “mediocre work from others.” As a result of the bad experience, the candidate withdrew their application.

In an article for The Guardian, writer Anna Spargo-Ryan expressed similar discomfort with a recruiting process she experienced. She first completed a kind of pre-interview with a chatbot. Interacting with the robot wasn’t an issue for her; the problem was that she later received automated, unsolicited “personality insights” via email and, worse, on a Friday evening. “Nothing in the chatbot process had mentioned personality ‘insights’. I hadn’t opted into anything extra. And to be honest with you, being underemployed has not been terrific for my self-esteem, so I wasn’t exactly craving a Friday-night character assessment by AI.” She argues that receiving uninvited, automated feedback can harm job seekers’ mental health.

She says her discomfort led her to visit the AI platform’s website, where she noted that it markets the feedback emails as a value-add. After all, don’t job hunters complain all the time about not hearing back after applying? Well, the platform may be overlooking one factor: who candidates want to hear from.

Applicants typically want to hear back from the recruiter or the hiring manager. Not every job seeker is interested in receiving feedback from an AI, let alone outside traditional work hours.

(A quick aside: LinkedIn is filled with complaints from job hunters who applied for a role only to receive automated rejections at unsuitable times, such as Christmas Eve. Automation is a marvel of the modern world, but there are moments when automated negative feedback hits hard.)

Employers should ensure AI tools respect candidate consent and timing preferences. Always ask whether candidates want to receive personality insights, and if they do, configure systems so messages aren’t sent outside business hours.
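As a rough illustration of what such a configuration rule could look like, here is a minimal sketch that checks consent first and then delays any message until the next weekday business hour. The 9:00-17:00 window, the Monday-to-Friday week, and the function name are assumptions, and the timestamps are treated as naive datetimes already expressed in the candidate’s local time; a real system would also need to handle time zones and public holidays.

```python
from datetime import datetime, time, timedelta
from typing import Optional

BUSINESS_START = time(9, 0)   # assumed business hours, candidate's local time
BUSINESS_END = time(17, 0)


def next_send_time(now: datetime, opted_in: bool) -> Optional[datetime]:
    """Return when a personality-insights email may be sent, or None if never."""
    if not opted_in:
        return None  # no consent, no message
    candidate = now
    while True:
        is_weekday = candidate.weekday() < 5  # Monday=0 ... Friday=4
        if is_weekday and BUSINESS_START <= candidate.time() < BUSINESS_END:
            return candidate
        if is_weekday and candidate.time() < BUSINESS_START:
            # Early on a working day: wait until opening time the same day.
            return datetime.combine(candidate.date(), BUSINESS_START)
        # After hours or weekend: jump to the next day's opening time and re-check.
        candidate = datetime.combine(
            candidate.date() + timedelta(days=1), BUSINESS_START
        )
```

Under these assumptions, an email generated at 19:30 on a Friday would be held until 9:00 on Monday, and a candidate who never opted in would receive nothing at all.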

Wrapping up

According to Fortune, hiring managers are increasingly turning to personality tests alongside skills tests as pre-employment exams, and achieving excellent outcomes such as reduced time-to-hire and fewer mis-hires. And AI opens up new avenues for assessing personalities.

AI provides deeper, data-driven insights that go beyond traditional frameworks — analyzing verbal and nonverbal cues to infer traits and predict job fit, and simulating realistic work scenarios. AI can also reduce cultural bias by localizing assessments and adjusting for different cultural contexts.

When administering an AI-based personality test, don’t hide behind the AI platform: be clear with the candidate that the questions they’re being asked are part of a personality test. And refrain from offering unsolicited feedback.

In a previous article, we examined the pros and cons of using personality tests in the recruiting process. Amid all the controversy surrounding the topic, one of the key takeaways from that article was that using a personality assessment for hiring, when it is not specifically recommended for that purpose, is not advisable. Importantly, this rule still applies when AI is added to the equation.

Another key takeaway was never to rely solely on personality tests for hiring decisions, and this of course still applies to AI-based personality tests. Incorporate personality assessments into a comprehensive hiring process that includes interviews and reference checks. Professional recruiters should give more weight to in-depth interviews in the overall evaluation of a candidate; however, if a test result aligns with observations from the interview process, it may provide additional insight to support decision-making.

Finally, preventing bad experiences helps protect your employer brand. Candidates who have a negative experience are more likely to voice their complaints on social media. They may also be discouraged from applying to the employer again, and might even stop buying its goods and services in their personal lives.
